Introduction

Last month I was at dataMinds Connect 2024, where I attended a fantastic talk by Databricks Senior Solution Architects Liping Huang and Marius Panga on Cost Management in Databricks. This was of particular interest to me, as I had recently implemented the built-in Cost and Usage Dashboard for a client, so I was keen to hear about any developments and the roadmap for new features.

Components of Databricks Cost

Costs within Databricks can come from multiple places, and Databricks call these “Billing Origin Products”. The full list is as follows:

| Billing Origin Product | Description |
|---|---|
| JOBS | Costs associated with running jobs on Databricks. |
| DLT | Delta Live Tables, a managed service for building and managing data pipelines. |
| SQL | Costs related to SQL Warehouses and SQL Serverless. |
| ALL_PURPOSE | General-purpose compute resources. |
| MODEL_SERVING | Serving machine learning models. |
| INTERACTIVE | Interactive clusters used for development and exploration. |
| MANAGED_STORAGE | Managed storage services. |
| VECTOR_SEARCH | Costs related to vector search capabilities. |
| LAKEHOUSE_MONITORING | Monitoring and observability tools for the Lakehouse platform. |
| PREDICTIVE_OPTIMIZATION | Services for predictive optimisation tasks. |
| ONLINE_TABLES | Costs associated with online tables. |
| FOUNDATION_MODEL_TRAINING | Training foundation models. |
| NETWORKING | Costs associated with networking, such as data transfer between regions or services. |

Databricks has created a unit of measurement for processing power called the DBU (Databricks Unit), and everything you pay for in Databricks is measured in DBUs. This means that, to manage your cost, you must first understand your usage. Converting usage into cost can be tricky, and the conversion differs from service to service. This is where the Databricks system tables come into play.
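To make the usage-to-cost conversion concrete, here is a minimal Python sketch of the logic, assuming simplified in-memory records modelled on the system.billing.usage and system.billing.list_prices tables. Column names such as sku_name, usage_quantity, usage_start_time, price_start_time and price_end_time are borrowed from those tables, but the real tables hold the price inside a pricing struct; the flattened unit_price_usd field, the EXAMPLE_JOBS_SKU name and the prices are hypothetical. The key idea is that each usage row is priced at the list price for its SKU that was in effect when the usage happened, because prices change over time.

```python
from dataclasses import dataclass
from datetime import datetime
from decimal import Decimal
from typing import Optional


@dataclass
class UsageRecord:
    """Simplified row modelled on system.billing.usage."""
    sku_name: str
    usage_start_time: datetime
    usage_quantity: Decimal  # e.g. DBUs consumed


@dataclass
class ListPrice:
    """Simplified row modelled on system.billing.list_prices.

    price_end_time is None for the price currently in effect.
    unit_price_usd is a hypothetical flattening of the real pricing struct.
    """
    sku_name: str
    price_start_time: datetime
    price_end_time: Optional[datetime]
    unit_price_usd: Decimal


def find_price(record: UsageRecord, prices: list[ListPrice]) -> ListPrice:
    """Return the price for the record's SKU that was in effect when the usage started."""
    for price in prices:
        if (
            price.sku_name == record.sku_name
            and price.price_start_time <= record.usage_start_time
            and (price.price_end_time is None or record.usage_start_time < price.price_end_time)
        ):
            return price
    raise LookupError(f"No list price for {record.sku_name} at {record.usage_start_time}")


def list_cost(records: list[UsageRecord], prices: list[ListPrice]) -> Decimal:
    """Total list-price cost: the sum of usage_quantity * unit price over all records."""
    return sum(
        (r.usage_quantity * find_price(r, prices).unit_price_usd for r in records),
        Decimal("0"),
    )


if __name__ == "__main__":
    # Illustrative SKU name and prices only, not real Databricks list prices
    prices = [
        ListPrice("EXAMPLE_JOBS_SKU", datetime(2024, 1, 1), datetime(2024, 6, 1), Decimal("0.15")),
        ListPrice("EXAMPLE_JOBS_SKU", datetime(2024, 6, 1), None, Decimal("0.20")),
    ]
    records = [
        UsageRecord("EXAMPLE_JOBS_SKU", datetime(2024, 5, 15), Decimal("10")),  # old price
        UsageRecord("EXAMPLE_JOBS_SKU", datetime(2024, 7, 1), Decimal("5")),  # new price
    ]
    print(list_cost(records, prices))  # prints 2.50
```

Matching on the price's validity window, rather than just taking the latest price, is what keeps historical costs stable when a list price changes.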

Databricks System Tables

If you come from a SQL Server background, then system tables will be familiar to you. If not, system tables are simply a collection of system-provided tables containing metadata such as table and column information for your data, audit logs, data lineage, and (the one that’s important for this discussion) billing, usage and pricing information.

In a Unity Catalog-enabled Databricks workspace, these tables live in the system catalog, grouped into various schemas. The usage and list_prices tables can be found under the billing schema.

Most system tables are not enabled by default and may need to be enabled by a Databricks Account Admin. Luckily for us, however, the billing schema is enabled by default. You may still need to be given access to the system catalog, or to the billing schema directly, before you can use it.
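As an illustration of that access step, an admin could grant a group read access to the billing schema with Unity Catalog GRANT statements. The minimal Python sketch below only builds the SQL strings; the group name finops-analysts and the helper billing_read_grants are hypothetical. In a Databricks notebook you would pass each statement to spark.sql(...), or run the SQL directly in the SQL editor. Granting SELECT at schema level covers every table in system.billing; granting it on individual tables such as system.billing.usage is a narrower alternative.

```python
def billing_read_grants(principal: str, catalog: str = "system", schema: str = "billing") -> list[str]:
    """Build Unity Catalog GRANT statements giving `principal` read access to one schema."""
    # Backtick-quote the principal so group names containing hyphens or spaces are valid
    quoted = f"`{principal}`"
    return [
        # USE CATALOG and USE SCHEMA let the principal navigate to the tables
        f"GRANT USE CATALOG ON CATALOG {catalog} TO {quoted}",
        f"GRANT USE SCHEMA ON SCHEMA {catalog}.{schema} TO {quoted}",
        # SELECT on the schema applies to every table in it (usage, list_prices, ...)
        f"GRANT SELECT ON SCHEMA {catalog}.{schema} TO {quoted}",
    ]


if __name__ == "__main__":
    for statement in billing_read_grants("finops-analysts"):
        print(statement)
        # In a Databricks notebook you could run it instead with: spark.sql(statement)
```

Keeping the grants at group rather than user level means new team members only need adding to the group to see the billing data.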

Whilst having access to these system tables is very useful, it can be quite complicated to relate them all together to obtain meaningful information and insight. This is where the cost management dashboard comes in.

Cost and Usage Dashboard

Enabling the Dashboard

Databricks released the Cost and Usage Dashboard in Public Preview in September 2024; it was previously available through dbdemos. It can now be enabled in the Databricks Account Console via the “Usage” pane, at either the account level or the individual workspace level. More details on how to enable the dashboard can be found in the Databricks documentation.

Dashboard Access

This is a Databricks “AI/BI” dashboard, and it lives inside the Databricks ecosystem. Users can be granted access to the dashboard object; however, for security reasons, they must also have read permissions on the underlying billing tables before they will be able to see any data.

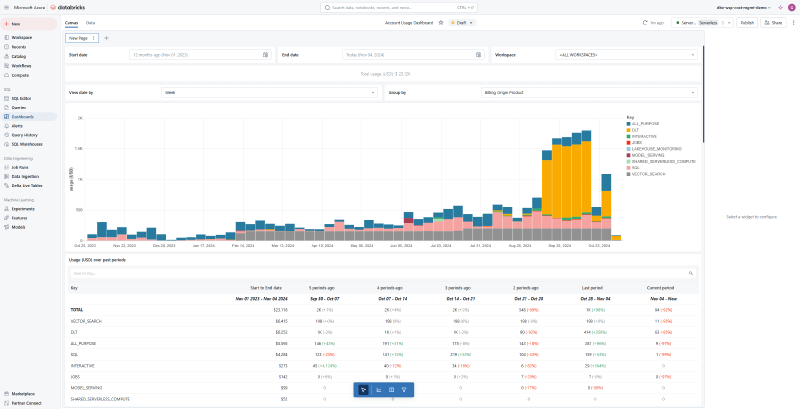

Dashboard Overview

The dashboard contains a few high-level visuals, which show things such as:

- Usage breakdown by billing origin product (these are the services we discussed earlier)

- Usage breakdown by SKU name (more granular than the above, e.g. whether you are using Photon)

- Usage analysis based on custom tags (defining your own tagging approach enables you to have heavily customised usage insights)

- Analysis of your most expensive usage

The dashboard converts all of the usage metrics above into USD using the list price. This is important to note, because for many customers the list price will not be the actual cost: many Databricks customers have an account price based on specific usage patterns, commitments, or agreements with Databricks. It’s fair to assume, however, that the list price will be the maximum cost.
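The breakdowns described above boil down to grouping priced usage by a column and summing. Here is a minimal Python sketch, assuming each usage row has already been converted to a list-price cost in a hypothetical list_cost field; billing_origin_product and custom_tags are real columns of system.billing.usage, but the dict-based rows, the helper names and the example tag key team are illustrative.

```python
from collections import defaultdict
from decimal import Decimal
from typing import Callable


def cost_by(rows: list[dict], key: Callable[[dict], str]) -> dict[str, Decimal]:
    """Sum each row's list_cost, grouped by the value that key(row) returns."""
    totals: dict[str, Decimal] = defaultdict(Decimal)
    for row in rows:
        totals[key(row)] += row["list_cost"]
    return dict(totals)


def by_product(row: dict) -> str:
    """Group by billing origin product, e.g. JOBS or SQL."""
    return row["billing_origin_product"]


def by_tag(tag: str) -> Callable[[dict], str]:
    """Group by the value of one custom tag; rows without that tag land in 'untagged'."""
    def key(row: dict) -> str:
        tags = row.get("custom_tags") or {}
        return tags.get(tag, "untagged")
    return key


def most_expensive(rows: list[dict], n: int = 5) -> list[dict]:
    """Return the n rows with the highest list_cost."""
    return sorted(rows, key=lambda r: r["list_cost"], reverse=True)[:n]
```

For example, cost_by(rows, by_tag("team")) would give a per-team view, and the size of its "untagged" bucket is a quick indicator of how consistently your tagging approach is being applied.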

Behind The Scenes

What’s really cool about the Cost and Usage Dashboard is that it provides you with the underlying data model and the SQL queries that create it. This enables you to reuse and extend these queries for further exploratory analysis. The dashboard itself is customisable, allowing you to edit the visuals and add additional slicers, but you can also edit and even extend the underlying data model to fully meet your reporting needs.

Power BI Template

Databricks recognise that for many organisations, Databricks AI/BI is not their primary reporting tool. For this reason, Liping and Marius have developed a Power BI Template (PBIT), which uses a slightly extended version of the Cost and Usage Dashboard data model discussed above. The PBIT is available on GitHub. The template makes use of Power BI features such as the decomposition tree, enabling you to easily drill down into your usage data and perform root cause analysis. Again, you can also make use of your custom tags here.

I connected the template to my SQL warehouse and was able to get the dashboard working within seconds.

It’s worth noting that the data model behind the PBIT also requires the compute system schema to be enabled, which, unlike the billing schema, is not enabled by default.

Roadmap

There are some cool features which have been announced on the roadmap for this solution. For the PBIT, a newer version will be available soon with the following inclusions:

- Long running jobs

- Long running queries

- Infrastructure costs

- Most frequent run_as

- Tag health checks

Before some of the above features can be reported on in the PBIT, additional system tables are required inside Databricks. These tables, along with some others, are also on the Databricks roadmap:

- billing.account_price

- billing.cloud_infra_cost

- compute.warehouses

- cluster.cluster_events

For me, there are two exciting tables in that list.

billing.cloud_infra_cost

Presently, you can only track the DBU cost associated with your Databricks compute. This does not include the underlying infrastructure costs you also pay to Azure for the virtual machines under the hood. When the billing.cloud_infra_cost system table becomes generally available (GA), we will be able to monitor and track the total cost of our compute across both Databricks and Microsoft, giving a more complete picture of our costs.

billing.account_price

The current Cost and Usage Dashboard uses the billing.list_prices system table, which may not accurately reflect what you actually pay for Databricks compute. When the billing.account_price system table becomes GA, we will be able to get an accurate price that takes into account any discounts we may have, for example from committing to a certain amount of compute consumption. This enables more accurate cost management.
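To show why the switch matters, here is a minimal Python sketch comparing a list-price estimate with the cost at an account price. Since the columns of the roadmap billing.account_price table were not described in the talk, the sketch deliberately works on single per-unit prices rather than guessing at its schema; unit_price and cost_comparison are hypothetical helpers, and any prices you feed them are illustrative.

```python
from decimal import Decimal
from typing import Optional


def unit_price(list_price: Decimal, account_price: Optional[Decimal] = None) -> Decimal:
    """Use the negotiated account price when one is known, else fall back to list price."""
    return list_price if account_price is None else account_price


def cost_comparison(
    usage_quantity: Decimal, list_price: Decimal, account_price: Optional[Decimal] = None
) -> dict[str, Decimal]:
    """Compare the list-price estimate with the cost at the effective (account or list) price."""
    list_cost = usage_quantity * list_price
    actual_cost = usage_quantity * unit_price(list_price, account_price)
    return {
        "list_cost": list_cost,
        "actual_cost": actual_cost,
        # How much the list-price view overstates spend (zero when no account price is known)
        "overstatement": list_cost - actual_cost,
    }
```

For example, 100 DBUs at an illustrative list price of 0.20 USD and an account price of 0.16 USD gives a list-price estimate of 20.00 USD against an actual cost of 16.00 USD, which is why a list-price dashboard is best read as an upper bound.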

Budgets: Private Preview

Last but not least, I wanted to touch on another exciting Private Preview feature which aids with Cost Management: Budgets.

Databricks Budgets can be set up at account level under the Usage section, the same area where you configure the Cost and Usage Dashboard. Here, you can create Budgets for specific workspaces (a single Budget can include multiple workspaces), and you can even use custom tags to refine your Budgets further. You can also provide a comma-separated list of email addresses to be alerted when a Budget is exceeded.
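Databricks evaluates Budgets itself in the account console; the minimal Python sketch below only illustrates the scoping rules described above. It models a budget as a set of workspaces plus optional custom tags, and checks usage rows that have already been priced into a list_cost field against a spending limit. The Budget class, its fields, the list_cost field and the helper functions are all hypothetical and are not the Databricks Budgets API.

```python
from dataclasses import dataclass, field
from decimal import Decimal


@dataclass
class Budget:
    """Hypothetical model of a budget's scope, spending limit and alert recipients."""
    name: str
    workspace_ids: set[str]
    limit_usd: Decimal
    required_tags: dict[str, str] = field(default_factory=dict)
    alert_emails: list[str] = field(default_factory=list)


def parse_emails(raw: str) -> list[str]:
    """Split a comma-separated list of email addresses, trimming spaces and dropping blanks."""
    return [email.strip() for email in raw.split(",") if email.strip()]


def in_scope(row: dict, budget: Budget) -> bool:
    """A row counts if it is in one of the budget's workspaces and carries all required tags."""
    tags = row.get("custom_tags") or {}
    return row["workspace_id"] in budget.workspace_ids and all(
        tags.get(key) == value for key, value in budget.required_tags.items()
    )


def check_budget(rows: list[dict], budget: Budget) -> tuple[Decimal, list[str]]:
    """Return in-scope spend and who to alert (empty unless the limit is exceeded)."""
    spend = sum((row["list_cost"] for row in rows if in_scope(row, budget)), Decimal("0"))
    recipients = list(budget.alert_emails) if spend > budget.limit_usd else []
    return spend, recipients
```

Requiring every listed tag to match mirrors the idea of using custom tags to narrow a Budget; a looser "any tag matches" rule would be an equally plausible design choice.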

Once a Budget has been set up, you can monitor it from the account console.

Conclusion

In summary, Databricks have released some really interesting features this year to help you monitor cost at both the workspace and the account level. Plus, if you’re a Power BI user, you can make use of the Power BI Template provided as well. And with some exciting new features on the roadmap, it’s only going to get better!